Prompt Refiner: Building an AI Tool That Lives on My Laptop

I started this project inside n8n — the no-code workflow automation tool I use frequently — curious if I could build something that felt like a real product using AI, but on my own terms. Prompt engineering was already part of my workflow, but I wanted to go further: could I create a tool that improves prompts, adapts them to different platforms, and runs entirely on my local machine?

What began as a quick three-node experiment in n8n — just enough to test the logic with OpenAI — quickly became something I wanted to shape into a proper, usable tool. Not just another "generate me a quote" gimmick, but a real refinement system.

So I decided to build Prompt Refiner — a tool that transforms messy prompts into cleaner, better-structured ones, refined by tone and platform. The idea was to help people write better prompts for ChatGPT, Midjourney, or any AI system, by making sure their intent was communicated clearly.

I was already running n8n locally. (If you're curious how, I wrote a blog post on how to run n8n on your local machine.) That meant the infrastructure for testing a full loop (UI > API > AI > UI) was basically at my fingertips.

Building the Local System

The frontend came first. I set up a lightweight UI using vanilla HTML, CSS, and JavaScript. It includes a prompt input field, dropdowns for tone and platform, and a response area. This part lives on GitHub Pages.

Then I wrote my own backend using Node.js and Express. This backend accepts POST requests, formats the prompt into the system/user message format that OpenAI expects, and returns the result. The backend runs entirely on my local machine: no cloud deployment, no external hosting.
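To make the system/user formatting step concrete, here is a minimal sketch of how a prompt could be wrapped before it goes to OpenAI. The function name `buildMessages`, its parameters, and the wording of the instructions are my assumptions for illustration, not the code the backend actually runs; only the `role`/`content` message shape comes from OpenAI's chat format.

```javascript
// Hypothetical helper: the name, parameters, and instruction wording are
// assumptions for illustration, not the real backend code.
function buildMessages(prompt, tone, platform) {
  // The system message carries the tone and platform instructions.
  const system = [
    "You refine prompts written for AI tools.",
    `Rewrite the user's prompt so it is clear and well structured for ${platform}.`,
    `Use a ${tone} tone.`,
    "Return only the refined prompt.",
  ].join(" ");

  // The user message carries the raw prompt, with stray whitespace removed.
  return [
    { role: "system", content: system },
    { role: "user", content: prompt.trim() },
  ];
}
```

Keeping this as a plain function means the framing can be tweaked and inspected without making a single API call.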

I used a basic API endpoint (/api/refine) and configured the backend to accept CORS requests from GitHub Pages. That was a journey in itself. Before this, I kind of accepted CORS as one of those annoying things you copy-paste around. But this time, I decided to actually understand what it is and how to control it. And I did.
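For anyone who wants to see what "controlling CORS" can look like, here is a rough sketch of an allow-list written as Express-style middleware. The origin below is a placeholder rather than my real GitHub Pages address, and the function names are hypothetical; treat it as one way to do it, not a copy of my backend.

```javascript
// Assumption: placeholder origin, not the real GitHub Pages site.
const ALLOWED_ORIGINS = new Set(["https://example-user.github.io"]);

// Returns the CORS response headers for a request's Origin header.
// An empty object means the browser will block the cross-origin call.
function corsHeaders(origin) {
  if (!origin || !ALLOWED_ORIGINS.has(origin)) return {};
  return {
    "Access-Control-Allow-Origin": origin,
    "Access-Control-Allow-Methods": "POST, OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type",
    "Vary": "Origin",
  };
}

// Express-style middleware with the usual (req, res, next) signature.
function allowGitHubPages(req, res, next) {
  const headers = corsHeaders(req.headers.origin);
  for (const [name, value] of Object.entries(headers)) {
    res.setHeader(name, value);
  }
  // Browsers send an OPTIONS preflight before a cross-origin JSON POST.
  if (req.method === "OPTIONS") {
    res.statusCode = 204;
    return res.end();
  }
  next();
}
```

With Express this would be registered with `app.use(allowGitHubPages)` before the route handlers. Echoing back only origins on the list, instead of answering with `*`, is what keeps other sites from calling the API from a browser.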

Once the API started talking to the frontend, and OpenAI began responding with refined prompts, the whole system clicked into place. I embedded the GitHub Pages version directly into my Squarespace site using an iframe.
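On the frontend side, the call to `/api/refine` can be as small as the sketch below. The base URL, the JSON field names (`prompt`, `tone`, `platform`, `refined`), and the function names are assumptions I made up for this example; the real request shape may differ.

```javascript
// Assumptions: API_BASE is a placeholder, and the JSON field names
// (prompt, tone, platform, refined) are illustrative only.
const API_BASE = "http://localhost:3000";

// Build the fetch() arguments separately so they are easy to inspect.
function buildRefineRequest(prompt, tone, platform) {
  return {
    url: `${API_BASE}/api/refine`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ prompt, tone, platform }),
    },
  };
}

// Send the prompt to the backend and return the refined text.
async function refinePrompt(prompt, tone, platform) {
  const { url, options } = buildRefineRequest(prompt, tone, platform);
  const response = await fetch(url, options);
  if (!response.ok) {
    throw new Error(`Refine request failed: ${response.status}`);
  }
  const data = await response.json();
  return data.refined;
}
```

A button handler would then read the input field and the two dropdowns, call `refinePrompt(...)`, and write the result into the response area.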

The Tech Behind It

Frontend: HTML, CSS, JS on GitHub Pages

Backend: Node.js + Express, running locally

AI: OpenAI API, with tone and platform-aware instructions

Middleware: routing, refinement logic, formatting, and basic security, all handled manually in the backend

Integration: Squarespace iframe
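As an illustration of the kind of check that "basic security" can include, here is a small input validator that could run before anything is sent to OpenAI. The option lists, the length limit, and the function name are all invented for this example; it is a sketch of the idea, not a description of the checks my backend performs.

```javascript
// Assumptions: these option lists and the length limit are made up for
// illustration; they are not the values used by the real tool.
const TONES = ["neutral", "formal", "playful"];
const PLATFORMS = ["ChatGPT", "Midjourney"];
const MAX_PROMPT_LENGTH = 2000;

// Checks a parsed request body and collects human-readable errors.
function validateRefineInput(body) {
  const errors = [];
  const { prompt, tone, platform } = body || {};

  if (typeof prompt !== "string" || prompt.trim() === "") {
    errors.push("prompt must be a non-empty string");
  } else if (prompt.length > MAX_PROMPT_LENGTH) {
    errors.push(`prompt must be at most ${MAX_PROMPT_LENGTH} characters`);
  }
  if (!TONES.includes(tone)) errors.push("tone is not supported");
  if (!PLATFORMS.includes(platform)) errors.push("platform is not supported");

  return { ok: errors.length === 0, errors };
}
```

Rejecting unknown tones and platforms up front also means user input never flows unchecked into the instructions sent to the model.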

What’s Going On in n8n?

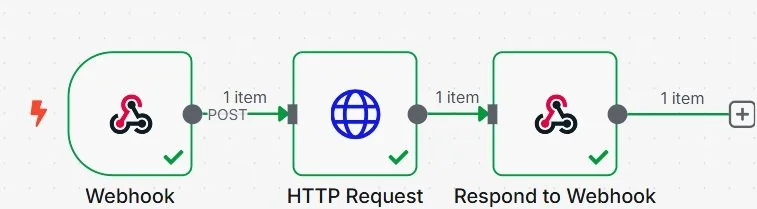

While the current demo doesn't use n8n actively, the original idea — and even the system’s first working version — was built using a simple three-node flow:

Webhook (receive prompt)

HTTP Request (send to my local API)

Response (return the refined prompt)

Behind the scenes, the actual refinement happens on my laptop — through JavaScript that formats and sends requests to OpenAI. But n8n was where I proved the idea first.

What I Took From This

I can build and ship a full AI tool without deploying a single cloud backend

Prompt engineering isn't just about the input — it's about the framing

Learning CORS properly saved me more time than I expected

Small tools feel bigger when they work end-to-end

Try It (If I’m Online)

Note: If it’s not working, I may have spilled coffee on my keyboard. That’s the charm of local servers.

This project helped me practice prompt engineering, solidify my understanding of backend/frontend integration, and demystify CORS once and for all. The tool is complete and functional, but it might still evolve. I’d love to add options for prompt type, export features, and a hosted version for public use.

For now, Prompt Refiner lives quietly on my machine. But it works, and it reflects exactly how I like to build: minimal, focused, real.